A team of researchers has developed Capsule, a new method for training large-scale Graph Neural Networks (GNNs) more efficiently. Compared with current out-of-core GNN systems, Capsule achieves up to a 12.02x improvement in runtime efficiency while using only 22.24% of the main memory. The work, by the Data Darkness Lab (DDL) at the University of Science and Technology of China (USTC) Suzhou Institute, was published in the Proceedings of the ACM on Management of Data.

GNNs have shown promise in fields such as recommendation systems, social networks, and bioinformatics. However, existing GNN systems struggle to process large-scale graph data because GPU memory capacity is limited. To address this, the DDL team introduced Capsule, an out-of-core training framework designed to train GNNs on such large graphs efficiently.

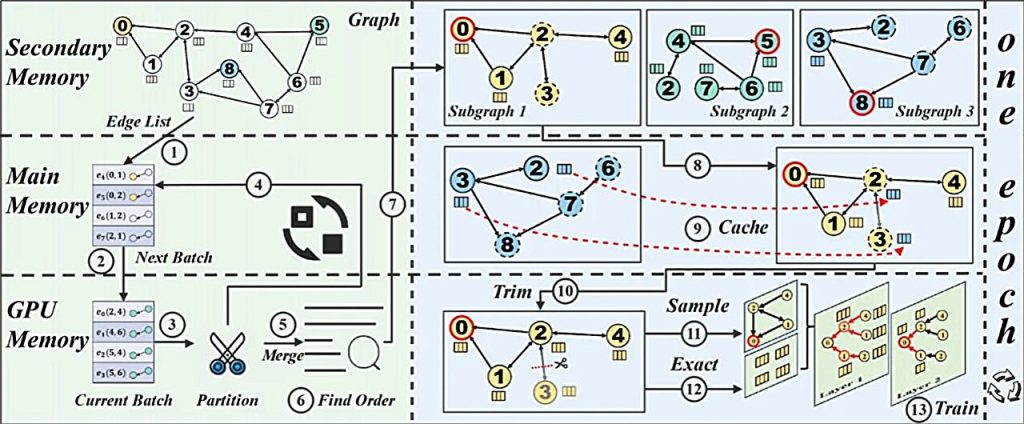

Unlike other out-of-core GNN frameworks, Capsule uses graph partitioning and pruning to reduce the I/O overhead between the CPU and GPU during backpropagation. These strategies ensure that the subgraph structures used for training fit entirely in GPU memory, which improves system performance. Capsule further speeds up training with a subgraph loading mechanism based on the shortest Hamiltonian cycle and a pipelined parallel strategy. It can be easily integrated with popular open-source GNN training frameworks.
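To illustrate the general idea of ordering subgraph loads by a shortest Hamiltonian cycle, here is a minimal Python sketch. The article does not say how Capsule measures the cost of moving from one subgraph to the next, so this example assumes a hypothetical cost model: the number of nodes in the next subgraph that are not already resident on the GPU from the previous one. The function names (`transfer_cost`, `cycle_cost`, `shortest_loading_cycle`) are illustrative only and are not part of Capsule, and the brute-force search is a stand-in rather than the solver the authors use.

```python
from itertools import permutations
from typing import List, Optional, Set, Tuple


def transfer_cost(resident: Set[int], nxt: Set[int]) -> int:
    # Hypothetical cost model (assumption, not Capsule's): nodes of the next
    # subgraph that are not already on the GPU must be loaded from the CPU.
    return len(nxt - resident)


def cycle_cost(parts: List[Set[int]], order: List[int]) -> int:
    # Total load cost of visiting partitions in `order`, then returning to
    # the first one so the next epoch can start from a resident subgraph.
    k = len(order)
    return sum(
        transfer_cost(parts[order[i]], parts[order[(i + 1) % k]]) for i in range(k)
    )


def shortest_loading_cycle(parts: List[Set[int]]) -> Tuple[List[int], int]:
    # Exact shortest Hamiltonian cycle by brute force. The start is fixed at
    # partition 0 because rotating a cycle does not change its cost.
    # Runs in O((k-1)!) time, so it is only practical for a few partitions.
    if not parts:
        return [], 0
    best_order: Optional[List[int]] = None
    best_cost: Optional[int] = None
    for perm in permutations(range(1, len(parts))):
        order = [0, *perm]
        cost = cycle_cost(parts, order)
        if best_cost is None or cost < best_cost:
            best_order, best_cost = order, cost
    return best_order, best_cost


if __name__ == "__main__":
    # Four toy partitions, each given as the set of node IDs it touches.
    parts = [{0, 1, 2, 3}, {10, 11, 12, 13}, {2, 3, 4, 5}, {4, 5, 10, 11}]
    print(cycle_cost(parts, [0, 1, 2, 3]))  # naive order: 14
    print(shortest_loading_cycle(parts))    # ([0, 1, 3, 2], 10)
```

In this toy case, visiting partitions in the order 0, 1, 3, 2 lets consecutive subgraphs share nodes, cutting the loading cost from 14 to 10. With many partitions, exhaustive search becomes infeasible and a heuristic such as nearest-neighbor construction followed by 2-opt refinement would be the usual substitute.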

In experiments on real-world graph datasets, Capsule outperformed existing systems, achieving up to a 12.02x performance improvement while using only 22.24% of the main memory. The authors also derive a theoretical upper bound on the variance of the embeddings produced during training. This work offers a new way to train GNNs on large graphs when GPU memory is limited.

For more information, see the research paper “Capsule: An Out-of-Core Training Mechanism for Colossal GNNs” in the Proceedings of the ACM on Management of Data (2025).