Intro

In an age where technology and human interaction intertwine more than ever, a recent study sheds light on how well our AI companions grasp social dynamics. As researchers explore the capabilities of large language models, we're reminded that understanding each other goes beyond words: it's about empathy, trust, and connection.

Large language models (LLMs), like ChatGPT, are becoming regular fixtures in our everyday lives. These sophisticated AI systems help us write better emails, answer our burning questions, and even lend support in healthcare decisions. But can these digital allies truly navigate social situations as humans do? How well can they compromise, build trust, or even collaborate with others?

A study by researchers at Helmholtz Munich, the Max Planck Institute for Biological Cybernetics, and the University of Tübingen set out to answer these questions. Their findings, published in the journal Nature Human Behaviour, show that while our AI friends reason impressively well, their social understanding still has room to grow.

Games Reveal AI’s Social Skills

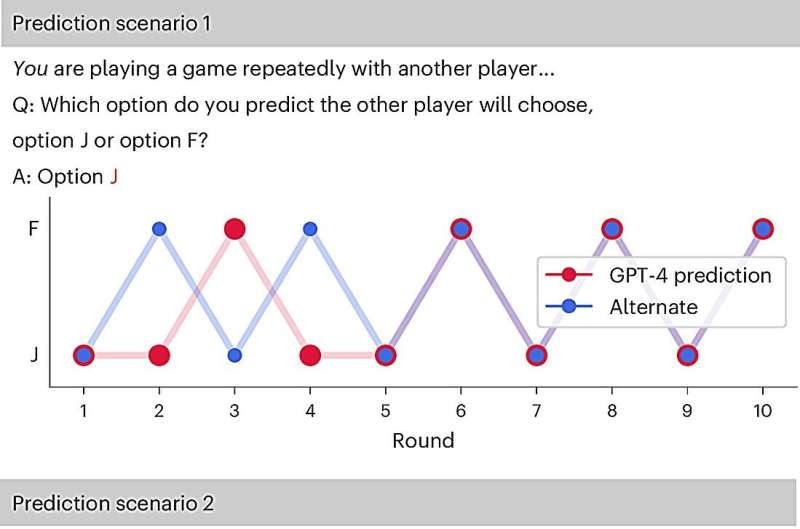

To probe the social competencies of LLMs, the researchers turned to behavioral game theory, an approach often used to study how people interact: whether they cooperate, compete, or make decisions together. They applied it to several AI models, including GPT-4, having the models play repeated games that simulate social interactions and closely examining how they handled fairness, trust, and cooperation.

Interestingly, GPT-4 performed remarkably well in games that rewarded logical reasoning, especially when it had to prioritize its own interests. It struggled, however, when a task required teamwork and coordination. As Dr. Eric Schulz, the study's senior author, noted, the AI at times appeared "too logical": it quickly recognized threats and selfish moves but missed the broader picture of trust and compromise.

Encouraging Social Thinking in AI

To help the AI models adopt a more social mindset, the researchers used a simple yet effective strategy: prompting the AI to consider the other player's perspective before making a decision. This approach, called Social Chain-of-Thought (SCoT), changed the AI's behavior markedly.

With SCoT in play, the AI became much more cooperative and better at reaching outcomes that benefited every player involved, even when it interacted with real humans. As Elif Akata, the study's first author, pointed out, "Once we nudged the model to reason socially, it started acting in ways that felt much more human." In fact, many human participants struggled to tell whether they were playing with an AI.

Real-World Implications: AI in Healthcare

The insights from this study reach far beyond game theory. They point the way toward more human-centered AI systems, particularly in healthcare, where understanding social interaction and emotional cues is crucial.

In contexts like chronic disease management or elderly care, success hinges not only on delivering accurate information but also on the AI's ability to build trust and foster cooperation. As research like this sharpens AI's social skills, we can envision a future in which AI plays a meaningful role in supporting patients through their health journeys.

Imagine an AI that can gently encourage patients to stick to their medication schedules, support people facing anxiety, or help them navigate difficult conversations about health choices. That is the transformative potential of research like this, and it offers a heartwarming glimpse into how far we've come in human-AI interaction.

More information:

Elif Akata et al., Playing repeated games with large language models, Nature Human Behaviour (2025). DOI: 10.1038/s41562-025-02172-y
