In critical fields like healthcare, or during a game of “Jeopardy!”, it is sometimes wiser to admit uncertainty than to risk a wrong answer. Doctors and game show contestants understand this, but many AI systems still answer even when their responses might be incorrect.

Researchers at Johns Hopkins University believe they have found a way to improve this. Their approach lets AI models think longer about a problem and uses a confidence score to decide when to say “I don’t know” instead of risking a wrong response. That capability is important in fields such as medicine, law, and engineering.

The study is available on the arXiv preprint server, and the team will present its results at the 63rd Annual Meeting of the Association for Computational Linguistics, scheduled for July 27 to August 1 in Vienna, Austria.

“Our journey began when we noticed that powerful language models took more time to solve complex problems,” says William Jurayj, a doctoral student in computer science involved in the study. “We wondered whether this extra thinking time could also help the models figure out if they had solved a problem correctly so they could tell the user.”

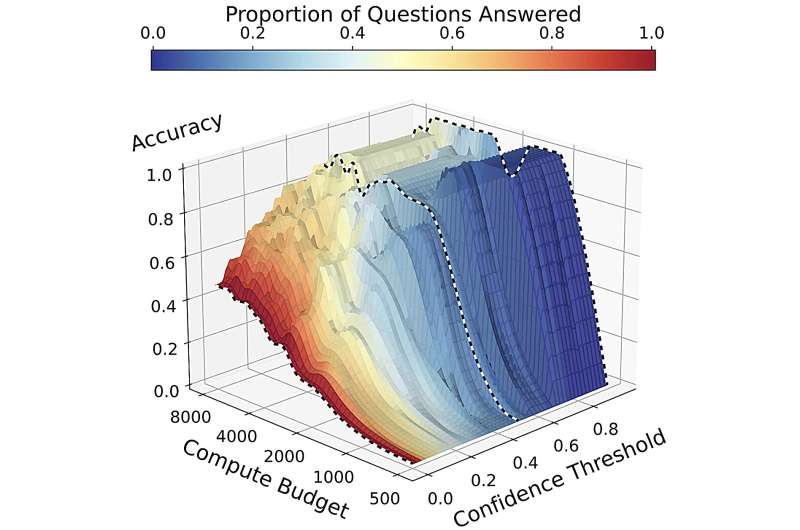

To explore this idea, the researchers had advanced language models generate reasoning chains of varying lengths while solving challenging math problems. They measured how the length of this reasoning affected both the models’ final answers and the models’ confidence in those answers. The models were allowed to answer only when their confidence exceeded a set threshold; otherwise, they could abstain and say “I don’t know.”
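The threshold rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers’ code: the `Prediction` class, the helper names, and the toy answers are hypothetical, and the confidence score is simply taken as given (how the models actually produce it is not modeled here). Returning `None` stands in for “I don’t know.”

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Prediction:
    answer: str        # the model's final answer after reasoning
    confidence: float  # assumed confidence score in [0, 1]; its source is not modeled here


def selective_answer(pred: Prediction, threshold: float) -> Optional[str]:
    """Answer only if confidence exceeds the threshold; None stands for "I don't know"."""
    return pred.answer if pred.confidence > threshold else None


def coverage_and_accuracy(
    preds: Sequence[Prediction], gold: Sequence[str], threshold: float
) -> Tuple[float, Optional[float]]:
    """Fraction of questions answered, and accuracy on the answered subset (None if none)."""
    answered: List[bool] = [
        p.answer == g
        for p, g in zip(preds, gold)
        if selective_answer(p, threshold) is not None
    ]
    coverage = len(answered) / len(preds) if preds else 0.0
    accuracy = sum(answered) / len(answered) if answered else None
    return coverage, accuracy


# Hypothetical toy data: three math answers with made-up confidence scores.
preds = [Prediction("4", 0.9), Prediction("7", 0.4), Prediction("12", 0.8)]
gold = ["4", "6", "13"]
print(coverage_and_accuracy(preds, gold, threshold=0.5))  # → (0.6666666666666666, 0.5)
```

Raising the threshold trades coverage for reliability: at `threshold=0.85` only the first question is answered, so coverage drops to one third while accuracy on the answered subset rises to 1.0.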

They found that more thinking time generally made the models both more accurate and more confident. Even with extra time, however, the models sometimes guessed wildly or made errors, particularly when wrong answers carried no penalty. The researchers also observed that under stringent confidence requirements, longer thinking could actually lower the models’ accuracy.

“This situation arises because answer accuracy is just a part of how these systems perform,” Jurayj clarifies. “When high confidence is required, a longer thinking time may lead to both more correct and more incorrect responses. In certain contexts, the extra correct answers can be beneficial, but in critical settings, this isn’t always acceptable.”

Motivated by these findings, the team proposed three “odds” scenarios that penalize wrong answers to different degrees: exam odds (no penalty), “Jeopardy!” odds (a wrong answer costs as much as a correct answer earns), and high-stakes odds (a wrong answer costs more than a correct answer earns).
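One way to make the three scenarios concrete is a scoring function in which a correct answer earns +1, an abstention earns 0, and a wrong answer costs a penalty. The sketch below assumes that scheme; the penalties of 0 and 1 follow the descriptions above, while the high-stakes penalty of 20 is a hypothetical value chosen for illustration, since the article only says it exceeds the reward. The function and variable names are likewise illustrative.

```python
from typing import Dict, Optional, Sequence

# Penalty for a wrong answer in each scenario; correct = +1, abstain ("I don't know") = 0.
# The high-stakes value of 20 is a hypothetical choice for illustration.
ODDS: Dict[str, float] = {"exam": 0.0, "jeopardy": 1.0, "high_stakes": 20.0}


def score(answers: Sequence[Optional[str]], gold: Sequence[str], penalty: float) -> float:
    """Total score: +1 per correct answer, -penalty per wrong one, 0 per abstention (None)."""
    total = 0.0
    for ans, ref in zip(answers, gold):
        if ans is None:
            continue  # abstained: no reward, no penalty
        total += 1.0 if ans == ref else -penalty
    return total


gold = ["4", "6", "13"]
always_answers = ["4", "7", "12"]  # hypothetical model that answers everything
selective = ["4", None, None]      # hypothetical model that abstains on its two misses
for name, penalty in ODDS.items():
    print(name, score(always_answers, gold, penalty), score(selective, gold, penalty))
# exam 1.0 1.0
# jeopardy -1.0 1.0
# high_stakes -39.0 1.0
```

Under exam odds the two models tie, because guessing costs nothing. Once wrong answers are penalized, the model that abstains pulls ahead, and the gap widens as the penalty grows.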

Under stricter odds, they found that a model should decline to answer if it is not confident enough after using up its computation budget. As a result, more questions may go unanswered in settings that demand higher confidence, but that can be a good outcome.
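A simple expected-value argument shows why stricter odds raise the bar for answering. As a sketch, assume the model’s confidence $p$ is a calibrated probability that its answer is correct, a correct answer earns 1 point, a wrong answer costs $c$ points, and abstaining scores 0. This is a standard decision-theoretic framing, not necessarily the paper’s exact formulation. Answering is worthwhile only when its expected score beats abstaining:

$$
p \cdot 1 - (1 - p)\,c > 0
\iff p\,(1 + c) > c
\iff p > \frac{c}{1 + c}.
$$

Under exam odds ($c = 0$) the break-even confidence is 0, so answering is never worse than abstaining. Under “Jeopardy!” odds ($c = 1$) it is $1/2$. Under a hypothetical high-stakes penalty of $c = 20$ it rises to $20/21 \approx 0.95$.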

“A student might find it frustrating to wait for 10 minutes only to realize they have to solve a math problem themselves because the AI is unsure,” Jurayj says. “However, in high-stakes situations, it’s far better to wait for an uncertain answer than to receive one that appears correct but actually isn’t.”

The team encourages the broader AI research community to report model performance in settings that penalize incorrect answers, hoping this will spur the development of AI that handles uncertainty better.

“We invite everyone to participate in reporting performance under these non-zero penalty conditions, as this will encourage the creation of improved methods for measuring uncertainty,” Jurayj adds.

More information:

William Jurayj et al, Is That Your Final Answer? Test-Time Scaling Improves Selective Question Answering, arXiv (2025). DOI: 10.48550/arxiv.2502.13962
