In recent years, advancements in machine learning have led to the creation of highly sophisticated models that excel at various tasks. Among these are multimodal large language models (MLLMs), capable of processing and generating different kinds of data, primarily text, images, and videos.

Models like OpenAI’s GPT-4 with Vision, DeepSeek-R1, and Google Gemini are now popular for creating various types of multimodal content, from social media images to specialized texts.

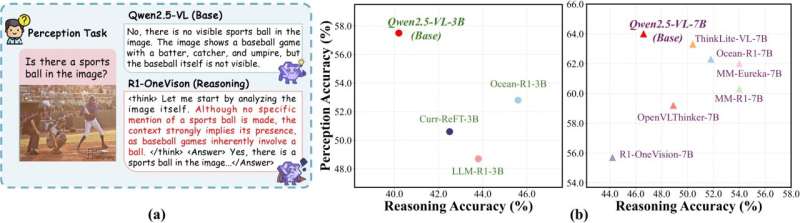

Although these models have advanced to the point where they can tackle mathematical and logical reasoning challenges, research shows that they sometimes generate information that does not match the input, such as describing nonexistent aspects of an image.

These inaccuracies, or “hallucinations,” arise from biases and language patterns the models learned during training on large text datasets. These biases can overshadow the visual data they process, affecting their performance.

A team of researchers from UC Santa Cruz, Stanford University, and UC Santa Barbara has developed new tools for analyzing these hallucinations, focusing on how reasoning abilities in MLLMs relate to their susceptibility to errors when interpreting images. Their findings, published in a paper on the arXiv preprint server, could inform future improvements in MLLMs.

“Advancements in test-time computation have enabled multimodal large language models to produce complex reasoning paths, leading to impressive performance on tasks like multimodal math reasoning,” the researchers wrote.

However, they also noted that this enhanced reasoning often leads to more hallucinations. As the length of reasoning increases, models may stray from factual information and rely more on learned language patterns.

The research team first examined how well MLLMs handle complicated reasoning tasks and found that longer reasoning sequences tend to increase the likelihood of hallucinations. This occurs as models pay less attention to visual information and depend more on prior language knowledge.

“Our attention analysis indicates that longer reasoning sequences lead to less focus on visual data, which contributes to hallucinations,” the researchers noted.
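One simple way to picture this kind of attention analysis is to measure what fraction of a model's attention falls on image tokens at a given generation step. The sketch below is purely illustrative and is not the authors' code: the function name `visual_attention_share`, the flat list of attention weights, and the boolean mask marking which context tokens come from the image are all assumptions made for this example.

```python
def visual_attention_share(attention_weights, is_visual_token):
    """Return the fraction of attention mass placed on visual tokens.

    attention_weights: non-negative weights over the context tokens for one
        generation step (hypothetical input; real models expose attention
        per layer and per head, which would need to be aggregated first).
    is_visual_token: booleans of the same length, True where the context
        token comes from the image.
    """
    if len(attention_weights) != len(is_visual_token):
        raise ValueError("weights and mask must have the same length")
    total = sum(attention_weights)
    if total <= 0:
        raise ValueError("attention weights must sum to a positive value")
    visual = sum(w for w, v in zip(attention_weights, is_visual_token) if v)
    return visual / total


# Made-up numbers: the first two context tokens are image tokens.
early_step = visual_attention_share([0.4, 0.3, 0.2, 0.1], [True, True, False, False])
late_step = visual_attention_share([0.1, 0.1, 0.3, 0.5], [True, True, False, False])
print(early_step, late_step)
```

In this toy example, the later step puts a smaller share of its attention on the image tokens than the earlier one, which is the pattern the researchers associate with longer reasoning sequences.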

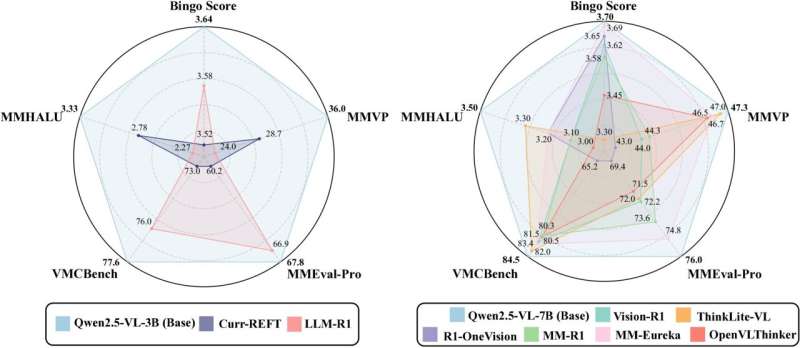

To analyze this issue more effectively, they introduced a new metric called RH-AUC, which measures how perception accuracy changes with the length of reasoning. This will help assess whether models maintain visual grounding during reasoning. They also created RH-Bench, a diagnostic benchmark that covers various multimodal tasks to evaluate the balance between reasoning capacity and hallucination risks.
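To make the idea of an area-under-the-curve score concrete, the following is a minimal sketch based only on the description above: it integrates perception accuracy over normalized reasoning length with the trapezoidal rule. The paper's formal definition of RH-AUC may differ, and the function name `perception_length_auc` and the sample numbers are hypothetical.

```python
def perception_length_auc(reasoning_lengths, perception_accuracies):
    """Trapezoidal area under perception accuracy vs. normalized reasoning length.

    Illustrative only; not the paper's exact RH-AUC formula.
    Reasoning lengths are rescaled to [0, 1], so a model whose perception
    accuracy stays constant at a scores exactly a.
    """
    if len(reasoning_lengths) != len(perception_accuracies):
        raise ValueError("inputs must have the same length")
    if len(reasoning_lengths) < 2:
        raise ValueError("need at least two measurements")
    points = sorted(zip(reasoning_lengths, perception_accuracies))
    shortest, longest = points[0][0], points[-1][0]
    if longest == shortest:
        raise ValueError("reasoning lengths must not all be equal")
    span = longest - shortest
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        width = (x1 - x0) / span          # normalized segment width
        area += width * (y0 + y1) / 2.0   # trapezoid for this segment
    return area


# Hypothetical measurements: accuracy drops as reasoning gets longer.
lengths = [100, 200, 400, 800]          # reasoning tokens (made up)
accuracies = [0.80, 0.74, 0.65, 0.50]   # perception accuracy (made up)
print(perception_length_auc(lengths, accuracies))
```

Under this sketch, a higher score means perception accuracy holds up better as reasoning grows longer, while a steep drop in accuracy pulls the score down.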

These new tools, the RH-AUC metric and the RH-Bench benchmark, may soon assist other researchers in exploring the connection between MLLM reasoning skills and hallucinations. The insights from the study could help guide future work on models that can address complex reasoning tasks without losing track of what is actually in the image.

“Our findings reveal that larger models often find a balance between reasoning and perception, which is more influenced by the types of training data than its quantity,” the researchers added. “This highlights the need for evaluation frameworks that consider both reasoning quality and the accuracy of perception.”

Written by Ingrid Fadelli, edited by Gaby Clark, with fact-checking and review by Robert Egan.

Learn more:

Chengzhi Liu et al, More Thinking, Less Seeing? Assessing Amplified Hallucination in Multimodal Reasoning Models, arXiv (2025). DOI: 10.48550/arxiv.2505.21523
